Sepupus, the internet has been abuzz of late because of a new MIT study called “Your Brain on ChatGPT”.

All over Reddit and the rest of the internet, people are drawing wild conclusions and reading patterns in the stars, deciding, alone or in agreement with others, that people are being made stupid, and that because MIT researchers have said so, it must be true.

I find it interesting and fascinating.

Now, in what way is this related to economics if at all?

Well, artificial intelligence is a very important part of our economy and it will continue to be important for the foreseeable future, as it shapes and reshapes the economy and how we treat human capital in ways that are intuitive and sometimes unintuitive, in ways more subtle and interesting than the standard narrative of robots replacing human beings may suggest.

It’s interesting to think about it and how it’s going to affect the way that we can live and work in this world which is ever-changing and continually evolving. With that in mind, here’s my perspective.

I do not generally think that ChatGPT is making us stupid.

I read the MIT study earlier, and I broadly understand the way that it is constructed.

You can have a look at it here.

Link: https://arxiv.org/pdf/2506.08872

Basically, they asked participants to write SAT-style essays across three sessions, on topics chosen from a range of options, in three different groups:

1. One purely using their brains

2. One using Google

3. One using ChatGPT

Then, they had some participants come back for a fourth session where they swapped people from one group to another — 18 people did this in total.

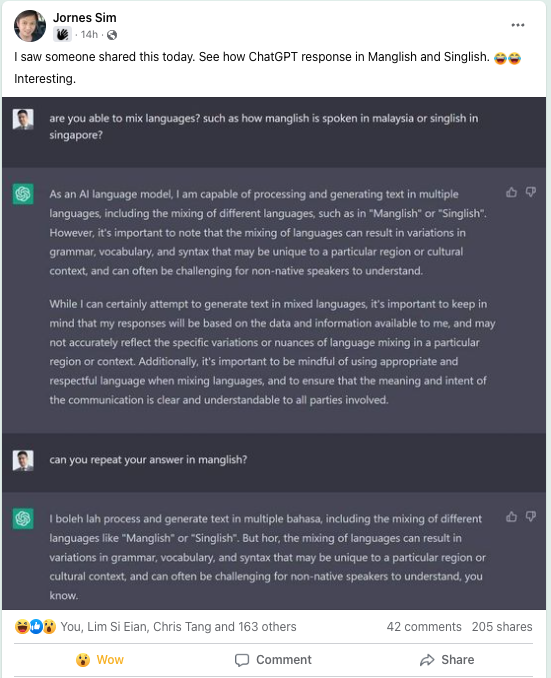

Now this is what ChatGPT says, in summarizing what happened:

(AI generated – also, as a full disclosure, I do use AI-generated content on this website once in a while; consider this a disclosure that you may see AI generated content here once in a while, although I affirm that I will curate it to ensure that it is high quality and it is accurate and matches experience. I hope you won’t mind as what matters more I think is the specific choice of what to show to you rather than the question of whether the content is generated by AI or if it is not!)

What the Study Did

The researchers wanted to understand how using ChatGPT-like tools (called LLMs, or large language models) affects your brain and your essay writing.

They divided participants into three groups:

- LLM group — people who used ChatGPT to help write their essays.

- Search Engine group — people who could use Google to help them.

- Brain-only group — people who weren’t allowed to use any tools; they just used their brains.

Each person wrote three essays under their assigned condition.

In Session 4, they mixed things up:

- People who had used ChatGPT before were asked to now write essays without it (LLM-to-Brain).

- People who had never used ChatGPT were now allowed to (Brain-to-LLM).

Only 18 participants completed this fourth session.

What They Measured

They used several ways to assess the participants’ thinking and writing:

- EEG (electroencephalography): This measures electrical activity in the brain. They looked at brainwaves to see how engaged or active the brain was.

- Essay analysis: They checked the essays using Natural Language Processing (NLP), human teachers, and an AI-based scoring system.

- They also looked at how similar or different the essays were (in terms of topics, words used, named entities, etc.).

- Self-reports: They asked participants how much they “owned” or felt connected to their writing.

What They Found

🧠 Brain Activity:

- Brain-only group had the strongest and most widely connected brain activity. Their brains were working hard and across many areas.

- Search Engine group had moderate brain engagement.

- LLM users had the least brain activity and the weakest connectivity — indicating low mental effort.

- When LLM users switched to Brain-only, their brain activity stayed low. It was as if their minds were still in “autopilot” mode — under-engaged.

- When Brain-only users switched to LLM, they had high activity in memory and visual/spatial reasoning areas — kind of like how Google users behaved.

📄 Essay Quality and Similarity:

- Essays from each group became more similar within their group — especially in wording and topics. LLM users’ essays were more homogeneous.

- LLM users had the lowest sense of ownership of their essays and often couldn’t remember or quote what they had written.

- Brain-only users had the highest sense of ownership and memory of their writing.

⏳ Long-Term Effects:

- Over 4 months, the people who used LLMs consistently:

  - Had weaker brain engagement

  - Wrote more similar, less original essays

  - Felt less connected to their work

- This suggests that relying too much on ChatGPT may make people less mentally engaged and less able to learn deeply.

Bottom Line (I disagree with this)

- LLMs like ChatGPT make writing easier, but they might also reduce mental effort and learning.

- This has serious long-term implications for education, especially if students use LLMs without actively thinking.

- The study doesn’t outright say ChatGPT makes you stupid, but it shows that heavy dependence on AI tools may hinder cognitive growth and originality over time.

Alright, no more AI.

I disagree with the interpretation, and I’ll tell you why.

It seems simple and intuitive to conclude that ChatGPT is making people stupid because of the lowered brain activation in the people who use LLMs over the period of several months.

However, in my mind, there are several problems with that. It is good that the authors of the study acknowledged its limitations and the need for more extensive studies: they note that participants had no choice of LLM, that the participants were all recruited from nearby universities and were not a diverse sample, and that the task itself was a narrow one.

Firstly, as the researchers admitted, this task was specifically related to essay generation with a limited set of topics.

Secondly, the observed drop in brain connectivity cannot be attributed purely to a decline in cognitive performance; it could equally reflect reduced engagement in the tasks.

For instance, people who used ChatGPT may not have been so absorbed with their first, second, and third essays and therefore when they came to the final task, they may have just come in with no strong feelings whatsoever.

This can be interpreted as a decline in cognitive performance, but should it be interpreted as such?

The researchers do not tell us, and it is probably something that they did not really look into in the context of this study.

Let’s also now consider another broader point about intelligence at large, now in a Sepupunomics context.

While working memory and brain connectivity might be taken as indicators of intelligence, it is unclear that they are the sole indicators of intelligence, or that a lack of connectivity or engagement indicates a lack of intelligence.

In fact, what we consider ‘intelligent’ now has changed drastically relative to what we used to understand as intelligent, and given the fluid nature of intelligence throughout the course of history, we have no reason to suppose that the future should be static or unchanging — or that connectivity or engagement in this context indicates the presence or absence of intelligence in a person.

Intelligence in every era has always been defined relative to outcomes that we consider to be valuable or worthwhile; as Naval Ravikant has observed, and I paraphrase, the intelligent man or woman is the one who gets what they want out of life.

In every generation, social and economic conditions have changed, and human beings and our brains have adapted and evolved in relation to those social, economic, and material conditions.

Accordingly, the jobs and the tasks that we now consider valuable have also vastly changed compared to the past – what is valuable human capital, or…

Human capital: the value employees bring to a company that translates to productivity or profitability, and more loosely, the value that human beings bring into an organization (whether a company, a nation-state, or the world) that translates into benefit to the world.

Autogates have replaced the toll booth operators that used to sit there lazily one after another, and the ATM has made it so that we speak to bank tellers only in special circumstances that we cannot deal with ourselves. The job that we call farmer now varies across countries and civilizations: it can still mean the smallholder carrying a hoe and wearing galoshes, or it can mean the large-scale tractor fleet operator running cloud seeding operations with artificial intelligence.

For many reasons, including these changes in technology, the jobs of our era have changed, the demands of employers have changed because different skills are now required, and what we consider valuable human capital has changed. This is not theoretical. It is already happening, it has been happening for years, and it will continue to happen in the years to come.

Coincidentally, I was speaking with some students earlier and telling them about how it has become normal and uncontroversial that people no longer remember phone numbers… But that’s not a bad thing, and neither does it indicate that people have become stupid because they cannot remember phone numbers.

Rather, it points to the fact that what is called cognitive offloading is now a possibility. Because technology permits it, we can use phones as external cognitive storage, thereby freeing us from dedicating those cognitive resources to memory. In our modern social context, it would be the person who cannot operate a phone who would likely be considered “stupid”, not the person who cannot remember a phone number but can retrieve it from their device.

The same thing has happened with directions. These no longer preoccupy so many of us because Google Maps has replaced the need to consult physical maps and work out how to get to certain places, even though that isn’t universally doable and won’t always work with all locations.

My Assessment:

Given the limited nature of the task, the possible alternate interpretations of the data, and the fact that intelligence can certainly be defined in other ways, I cannot conclude that there is a causal link between ChatGPT usage and a drop in intelligence or an increase in stupidity.

Instead, and in critiquing the study, I would say that ChatGPT allows us to participate in the world in new and different ways, which some might argue reflects heightened intelligence, and which the study did not account for.

Note, for example, that the study task very much does not represent the best use of ChatGPT: merely using ChatGPT to generate essays and then copying and pasting the contents to create a Frankenstein creation that the creator, so to speak, had no knowledge of and was only able to appreciate on a surface level.

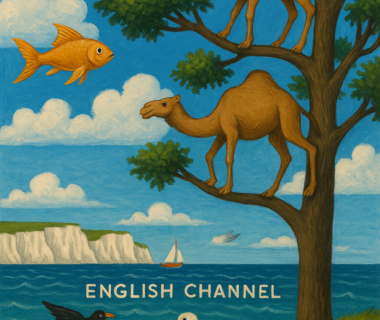

We are perhaps aware that we should not ask camels to climb trees, fish to fly in the skies, or birds to swim across the English Channel.

That would be absurd and it would be entirely illogical.

It is fun to visualize, though!

In the same way, I think it is silly to suppose that we should evaluate people’s brain waves on ChatGPT and then come up with easy conclusions about whether they have become smarter or dumber when in the very first place, using ChatGPT in that particular situation was analogous to all of the somewhat colourful examples I had provided earlier.

With ChatGPT, people have the ability to explore a very wide range of topics very quickly.

They have the ability to confirm their assumptions, assess their own thinking, ask questions that people would never ask under normal circumstances, and then figure out whether they were correct or if they were wrong and obtain directions for future research.

This cycle of confirmation and disconfirmation, research, understanding, analysis, and synthesis is extremely quick, but none of those things, or the ways that they may relate to the ‘intelligence’ of the new era, is really tested by asking someone to choose a single essay topic and write on it, and none of this is accounted for under the conditions that the researchers placed the participants in.

As acknowledged by the researchers, the results that we saw were highly context-dependent. If intelligence is, as Mr. Ravikant said, getting what you want out of life, it seems almost a little silly to imagine that a study involving writing an essay would generalize to the entirety of life and the vast array of situations outside of essay writing that a person could ostensibly use ChatGPT for.

We as laypeople may come up with a hundred misconceptions of what the results may or may not show, and it is entirely a person’s right to talk about what happened to their mother/father/sister/brother/irresponsible child/precocious baby using ChatGPT or anything else they like…

But the capital-T Truth remains out there and definitely should be a subject of investigation for the future.

Conclusion:

We cannot unambiguously conclude that ChatGPT inherently makes you stupid or makes you smart, certainly not from the study. The authors affirm this as well, and the truth, as it turns out, remains a matter of opinion.

Here is mine.

I would not guess that the capital-T Truth is that people who use ChatGPT are becoming more stupid, at least not with respect to how our society defines intelligence now or will redefine it at a later stage, given the sea change that AI is bringing to our world. After all (and this is AI), Malaysia’s MyDIGITAL blueprint and Singapore’s AI governance frameworks both acknowledge that productivity in the 21st century isn’t just about raw mental horsepower; it’s about tool fluency, adaptability, and strategic attention.

Like any other tool, ChatGPT can make you stupid or it can make you smart, depending on how you choose to use it. Calculators can certainly make you dumb if you repeatedly bash them against your head and end up rewiring your brain the wrong way, I suppose, although that’s not really something that people use calculators for, even when we relied upon them. Perhaps we made up for what we lost in arithmetic skills with a greater and vaster exposure to problems that we would never otherwise have encountered.

This doesn’t exclude higher levels of talent from emerging within the system as outliers that stand beyond the calculator, the pen, the paper, and certainly ChatGPT. It also doesn’t exclude the possibility that, because of AI and the way that all of us are using it and living through it in this era, even the paradigm of what we consider worthwhile to teach and to learn, both in economics and in life, will change along the way.

On my part, I am pretty confident that, as a result of ChatGPT, I have become smarter than I would have been in an alternate universe where it had not come into existence.

Of course, there is no way to construct a counterfactual or to disconfirm that. But I suppose in the long run and on the balance of things, time will tell. If using this technology continues to help me get what I want out of life and everything good along the way, in ways that continue to affirm my sense that this technology is game-changing, cognitively altering, and a complete break from the way that we used to do things, then I suppose that I will not have been entirely wrong in my assessment.

Thank you for reading, and see you in the next one!

V.