“Upload your essay into Turnitin by 11:59pm on Thursday night?

You meant start the essay at 11:57 then submit it at 11:58, am I right?” — Gigachad ChatGPT student.

We begin our discussion with the sweet smell of plagiarism.

It wafts in the air as educators run around like headless chickens, looking here, looking there as they flip through oddly good essays with panicked expressions.

“Was this AI-generated, Bobby?!” says a hapless teacher, staring at a piece of paper that seems curiously bereft of grammatical errors, suspecting that Bobby could never have created something of this caliber.

“No teacher, I just became smart!” Bobby cries, running off into the sunset because he is sad, he is going to become a member of an emo boyband, and he doesn’t want to admit that he generated his homework with ChatGPT.

This smell casts fear and trepidation over every part of our education system, for it threatens to break it. Education is meant to be sacrosanct; after all, is it not the very system designed to teach humans facts and knowledge and, above all, to communicate and collaborate to solve the problems of our era with intelligence, initiative, and drive?

It’s unsurprising that the world of education has flipped out over ChatGPT, because artificial intelligence opens up the very real possibility that schools may be unable to detect AI-generated work.

Fun and games, right? It’s just a bunch of kids cheating on assignments with artificial intelligence? It’s not going to affect the older generation?

As it turns out, no — that’s not the case. I’ll explain why later.

But before that, let’s talk a bit about the part of our education system that AI is threatening: Essay-writing.

If students simply choose to let their work be completed by artificial intelligence and forget all else, that just means that they’ve forgone the education that they’re supposed to have received, thereby crippling them by an act of personal choice, right…?

But each of us has been a student, and if we have children, our children either will be or have been students too; there is a deep emotional connection that stretches across the entire world when it comes to this.

Therefore, when Princeton University CS student Edward Tian swooped in to offer a solution, it’s not all that surprising that the world flipped.

Enter GPTZero.

Humans deserve the truth. A noble statement and a very bold one for a plagiarism detector, but something that’s a little deeper than most of us would probably imagine.

But consider this.

Not everyone who uses AI text is cheating, in the sense of doing something they are not supposed to and thereby violating rules, so the term ‘plagiarism detector’ doesn’t always apply here.

This algorithm, as with other algorithms that attempt to detect AI-generated text, is not just a plagiarism detector that merely serves to catch students in petty acts of cheating: it is an AI detector.

An AI Detector At Work.

So how does it work?

GPTZero assigns a likelihood that a particular text is generated by AI by using two measures:

Perplexity, and Burstiness.

Essentially, in more human language than that which was presented on GPTZero’s website, GPTZero says that…

The less random the text (that is, the lower its ‘perplexity’), the more likely it was generated by an AI.

The less that randomness varies across the text (that is, the lower its ‘burstiness’), the more likely that the text was generated by AI.

Anyway, GPTZero gives each text a score for perplexity and burstiness and, from there, outputs a probability that given sentences of the text were generated by AI, highlighting the relevant sentences and displaying the result to the user.
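To make those two measures a bit more concrete, here is a minimal toy sketch in Python. It is emphatically not GPTZero's implementation: the post's source doesn't describe GPTZero's model or thresholds, and real detectors score text with a neural language model. Here a tiny add-one smoothed word-frequency model stands in for it (an assumption), perplexity is the exponential of the average negative log-probability per word, and burstiness is taken to be the standard deviation of per-sentence perplexity (also an assumption). Every name below (`UnigramModel`, `perplexity`, `burstiness`) is hypothetical.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text):
    """Naively split after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


class UnigramModel:
    """Toy stand-in for a real language model (add-one smoothed word counts)."""

    def __init__(self, reference_text):
        self.counts = Counter(tokenize(reference_text))
        self.total = sum(self.counts.values())
        self.vocab_size = len(self.counts) + 1  # one extra slot for unseen words

    def prob(self, token):
        return (self.counts[token] + 1) / (self.total + self.vocab_size)


def perplexity(model, sentence):
    """exp of the average negative log-probability per token."""
    tokens = tokenize(sentence)
    if not tokens:
        return float("nan")
    avg_neg_log_prob = -sum(math.log(model.prob(t)) for t in tokens) / len(tokens)
    return math.exp(avg_neg_log_prob)


def burstiness(model, text):
    """Assumed definition: population std. dev. of per-sentence perplexity."""
    scores = [perplexity(model, s) for s in split_sentences(text)]
    scores = [x for x in scores if not math.isnan(x)]
    return pstdev(scores) if len(scores) > 1 else 0.0
```

In this sketch, a text whose sentences are all equally predictable to the model gets a burstiness of zero, while a text that mixes predictable sentences with surprising ones scores higher. A detector along these lines would treat low perplexity and low burstiness together as a hint of machine authorship, though where to draw the line is exactly the hard part.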

Alright, sounds great!

Does GPTZero deserve the hype, though?

…Does this actually work?

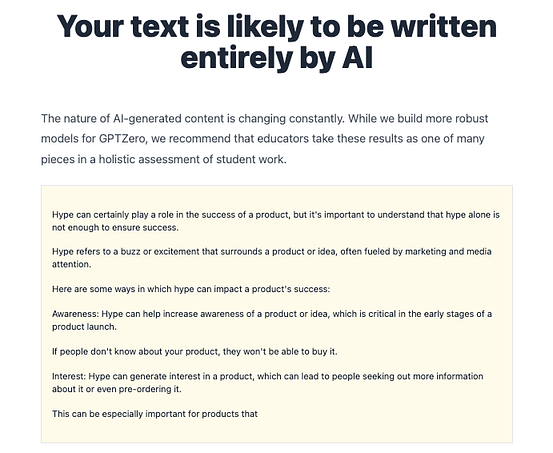

Let’s try it with this pleasant, AI-generated text that is about, of all things, the importance of hype (lol).

That’s 100% AI-generated and we know that as fact.

…Would we know if we didn’t see it in the ChatGPT terminal window, though?

…Okay, let’s not think about that.

Down the hatch…

…And boom.

As we can see, GPTZero, humanity’s champion, managed to identify that the text that we had generated was written by AI.

Hurrah!!!

Or…?

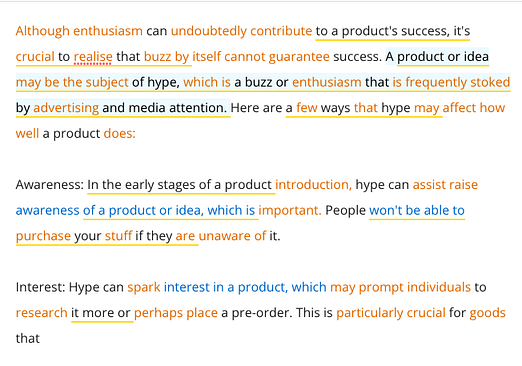

I proceeded to rewrite the essay with another AI software.

…After which GPTZero essentially declared:

So nope, GPTZero can’t detect rewritten texts that were generated with AI — which it should be able to if it truly is an *AI* detector in the best sense — and which in turn suggests that the way that it’s been operationalized has yet to allow it to be the bastion protecting humanity from the incursion of robots into our lives.

It’s not that GPTZero, or OpenAI’s own AI Text Classifier, or any of a whole panoply of other AI detectors, is bad or poorly operationalized, by any means. Rather, the operationalization is supremely difficult because the task is punishingly hard. We are unlikely to have a tool that can detect AI-generated text with 100% accuracy unless we perform watermarking (MIT Technology Review); short of that, we would have to combine multiple algorithms or come up with alternate measures to do so.

An Arms Race between AI Large Language Models (LLMs) and AI Detectors, and why you should care (even if you’re not a student).

As I’ve mentioned, there is an arms race at hand between AI Large Language Models (LLMs) like ChatGPT and AI detectors like GPTZero; the likely consequence is that the two will compete with one another, each making progress in its own way and pushing both technologies forward.

Personally, I think that AI detectors are fighting a losing battle against LLMs for many reasons, but let me not put the cart before the horse — it is a battle to watch, not to predict the outcome of before it’s even begun.

Implications of this strange war:

But why should you care about any of this if you’re not a student? It’s not like you’re going to be looking at essays constantly, right?

Let’s set aside the fact that you’re reading a blog post right now, and let’s also move away from the plagiarized essay bit that we’ve been thinking about, as we gravitate towards thinking about how ChatGPT is a language model.

It’s a good bet that you use language everywhere in your life, business, and relationships with other people in order to communicate, coordinate, and everything else.

When we go around on the Internet, it’s not always immediately evident what was AI-generated, what was generated by a human or, for that matter, what was inspired by an AI and later followed through by a human.

The whole reason we need something like an AI detector is that we may not be able to tell, with our own eyes and minds, whether a particular piece of language (which we most often experience in the form of text) is AI-generated. We literally have to rely upon statistical patterns to evaluate something that we are looking at directly in front of us, even with our brains fully engaged in evaluating the whole output.

The problem is…

Language doesn’t just exist as text.

Language exists as text, yes, but also as speech. Moreover, speech and text are easily convertible to one another — and we know very well what ChatGPT is doing: Generating text.

We now know that there are Text To Speech (TTS) models that generate speech from text. They’re not necessarily all great, but that’s beside the point: their existence presages the translation from text into voice.

Think about it.

If AI-generated voices and their intonations become sufficiently realistic-sounding (VALL-E, is that you?), how might you know that these voices aren’t real unless there are severe model safeguards that impede the models from functioning as they are supposed to?

Now combine that indistinguishable voice with sophisticated ChatGPT output that can evade any AI detector and in turn may, depending on the features that end up developing, evade your own capacity to tell whether you are even interacting with a human or not.

How would that play out in the metaverse?

How would that play out in the real world, over the phone?

How would you ever know whether anyone that you’re interacting with is real or not? Whether they are sentient?

The battle between AI and AI Detectors is not just a battle over the difference between an A grade and a C grade.

It’s a battle over a future where what’s at stake is identifying what even qualifies as human.