The other day, I used OpenAI’s new Whisper model for the first time…

…Only to realize something very, very strange.

If you’ve not heard about Whisper, it’s OpenAI’s automatic speech recognition (ASR) system, and it’s significantly more accurate than something like Siri (which I usually use) or similar technologies.

Anyway, for an upcoming Medium piece, I narrated everything into the voice memo app on my iPhone during the 15-minute drive from my home to my cello lesson, planning to have Whisper transcribe it afterward.

Whisper did it *almost* perfectly!

Then I uploaded the transcript into ChatGPT just to format it, without changing any of the text.

This was perfect! It was well transcribed, everything looked good, and all that remained was for me to just post the thing, right?

…No.

Because, you see, at this point a strange question started to brew in my mind:

Was this text AI-generated or human-generated?

I asked several people this question, and almost all of them said the same thing: it was AI-assisted, but the text itself was primarily generated by a human.

To me, that makes a lot of sense. I dictated it myself, so it could only count as AI-generated if what’s inside my head is not, in fact, a human brain but some sort of supercomputer.

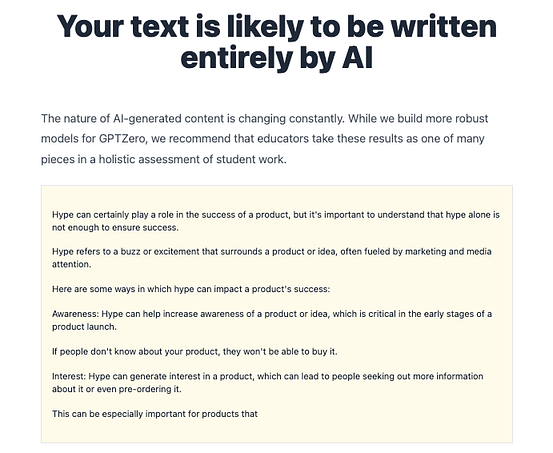

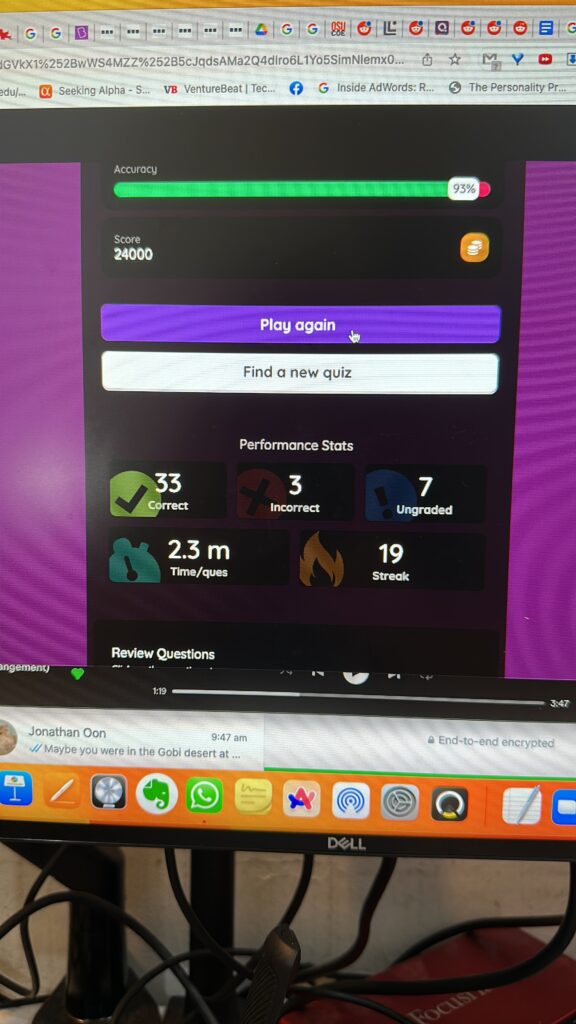

So I decided to check with GPTZero, just to be sure.

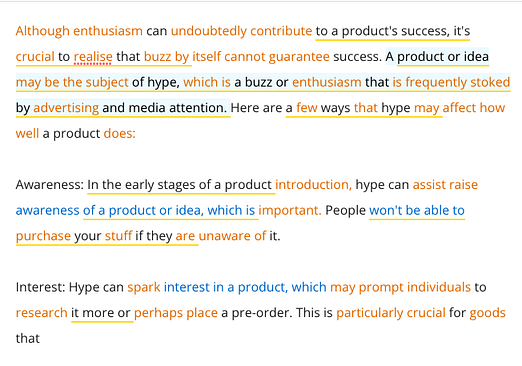

Here’s what GPTZero had to say, as it casually highlighted in bright yellow the parts of my essay that it thought were AI-generated.

I was a little shook.

Essentially, the majority of the text was determined to have been generated by AI.

At first, I considered a couple of different possibilities. I wondered to myself: was it because I had put the text through ChatGPT? Could the text have been watermarked or modified in some way that allowed GPTZero to determine that it had been generated by AI?

That didn’t seem to make sense, particularly since OpenAI has not yet implemented watermarks in its text output. Besides, ChatGPT hadn’t modified the text in any way apart from the paragraphing.

Therefore, what was the only logical possibility here?

The only possibility was that, according to GPTZero, I sounded like an AI.

This got me thinking quite a bit, and it just so happened that I ran into my friend Sandy Clarke at the gym. (Sandy is wonderful and incredibly humble relative to what he’s achieved; check him out here!) When we discussed the matter, he suggested that perhaps what reads as AI-generated is simply formal speech, and that I should consider the speeches of JFK and Obama. So I went right ahead and put JFK’s inaugural speech into GPTZero:

…Then I went on to input Obama’s inaugural speech:

…So did this mean that John F. Kennedy and Barack Obama were both advanced forms of artificial intelligence, sent to planet Earth to rule over the most advanced societies in the world, societies over which no normal human could have presided?

It would be funny if that were true.

At this point, I realized that the way I speak is simply similar to the way these people speak, which in turn is similar to what GPTZero identifies as AI-generated.

That’s not all that surprising, since my job is to help students learn to write effectively: to assist them with their grammar and with the way they use the English language.

But it made me wonder. Interacting with artificial intelligence is a new kind of interaction, in which we are essentially conversing with, and reading from, a tool we use constantly. Is it all that surprising that this could change our language, and with it the way we think and communicate?

Humans won’t necessarily end up fusing with machine parts, as some movies suggest we will, but the way we interact with technology will certainly change our culture in ways that may not be apparent at the outset. What will those changes be? The answer isn’t immediately clear.

It was definitely funny to think about, though, because it leads into all sorts of interesting questions about sentience and about the people we communicate with. What if the people around us adopt AI language patterns to the point that we can no longer distinguish the language used by artificial intelligence from the language used by human beings?

That might be one of the ways in which we become more machinelike as a species, or perhaps not. Either way, it was pretty interesting to watch this happen and to ask myself how I am being influenced by AI, because we often think of humans and AI as distinct from one another, separated by clear boundary lines…

But how are those boundary lines changing over time? The answer to that question is unclear to me.

As we interact with AI, I suppose that we will start to talk a little bit more like AI.

Going forward, I expect that AI detectors won’t be a meaningful way of telling humans apart from AI, and that our own natural instincts for judgment and discernment may instead become just a little bit finer as we go through life.

For my part, I find it kind of funny that people reading this piece might, rightfully, think that I am an AI: a sentient one, maybe, but an AI for all intents and purposes.

To that I say… who knows? It could happen to you, too.